There are a few memes/popular posts doing the rounds these days that supposedly prove whether or not you could be a psychopath.

The first one is a bit of fun:

“While at her own mother’s funeral, a woman meets a guy she doesn’t know. She thinks this guy is amazing — her dream man — and is pretty sure he could be the love of her life. However, she never asked for his name or number and afterwards could not find anyone who knows who he was. A few days later the girl kills her own sister – but why?”

Now, there are surely plenty of ways one could solve this puzzle, but if your answer was because the girl thought the man would show up to the second funeral… you may be a psychopath.

Well, that’s not the case. The riddle above hasn’t been substantiated in any way. The second riddle below, however, comes from a questionnaire used in many studies… and is one you may have seen on these humble pages before.

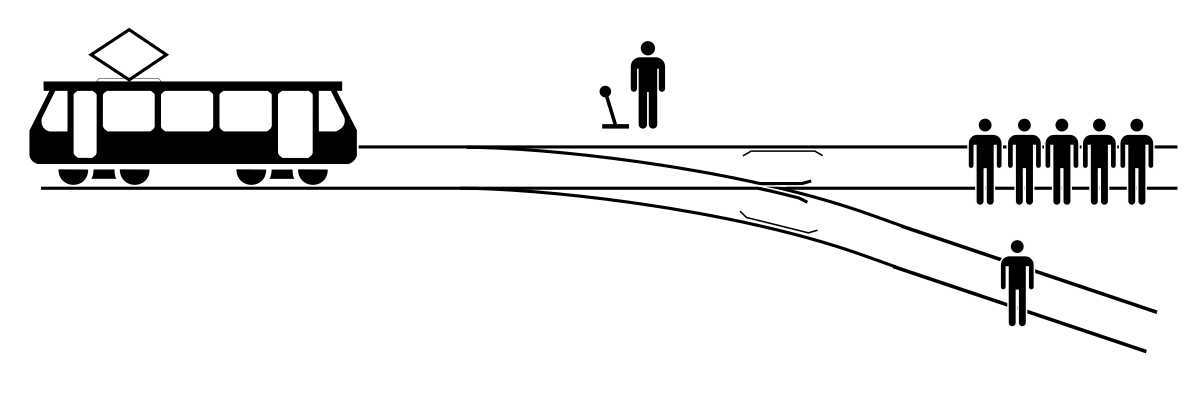

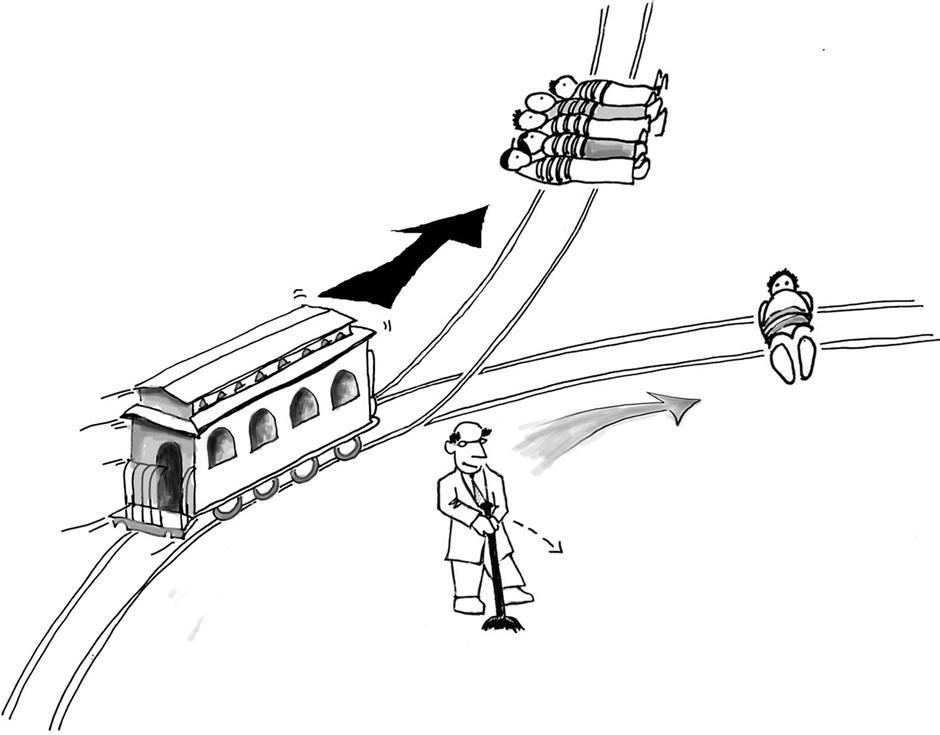

It’s called the Trolley Dilemma and it is an excellent thought experiment in ethics and psychology… as well as a good introduction to the ideas of utilitarianism.

It essentially goes like this:

“A runaway trolley is about to run over and kill five people and you are standing on a footbridge next to a large stranger; your body is too light to stop the trolley, but if you push the stranger onto the tracks, killing him, you will save the five people. Would you push the man?”

(Keep in mind, your response may be one indication that you are a psychopath…)

Now… the inherent value of philosophically trying to solve ethical issues should hopefully be obvious. After all, this is a site dedicated to wisdom! The ancients would have most definitely approved of the exercise for its own sake.

But we do live in a very practical world… so for those who want to take thought experiments out of the classroom and onto the streets, we can do that very easily with this one… especially with regard to modern technology.

Variants of the original Trolley Driver dilemma arise in the design of software that controls autonomous cars. Basically, programmers can choose to have a self-driving vehicle, say, crash itself if that means not driving into a crowd. The issue, of course, is that it might sacrifice the driver – or all of the car’s occupants – in the process.

This dilemma – as well as the original trolley version – can have infinite levels of complexity. Add in factors such as the health and age of the individuals involved, or their role and contributions to society (imagine one of the folks was Albert Einstein, for instance, or Hitler…), and you have a right mess of a philosophical debate.

So with that in mind, we’d like to turn it to you, dear reader.

Do you turn the switch? Do you purposely crash the car? What if it’s you who is driving it? How do you solve the Trolley Dilemma?

As always, you can comment below or write me directly at [email protected].

One comment

Do nothing. It’s not your fault. You have no moral responsibility to strangers, nor do you have the moral right to sacrifice a stranger. Maybe the stranger should jump to save them. There are a myriad of ‘but what if’ alternatives one can think up to try to force a different result.

Years ago, if I remember correctly, Ayn Rand posed the scenario of a man, his wife and their child, in which the wife was in a position to save either her husband or her child. She said to sacrifice the child: you can have another, but if you love your husband he is your greater value.

Isn’t it a case of value and responsibility?

The ‘good Samaritan’ made a moral choice to help, but the injured man had no right to be helped.
